Resolution & Compression

What is the resolution of the following 2 images?

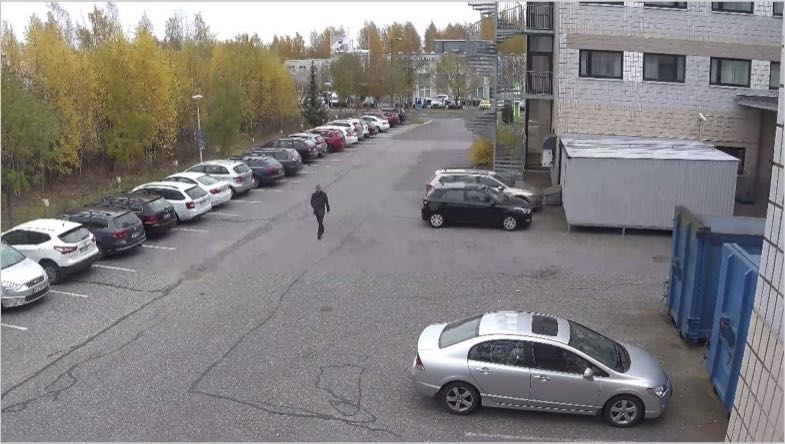

If we define resolution as the frame size of the image, both images were captured at 3840 x 2160 (4K). One was produced by compressing the original image by a factor of 64 and the other by a factor of 266.


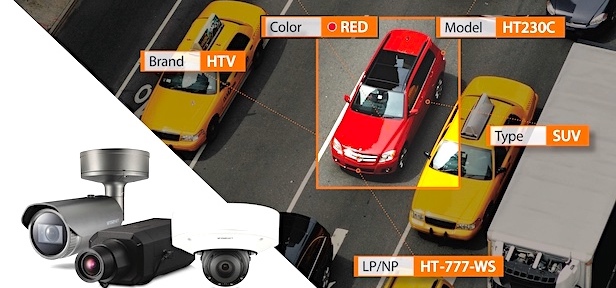

Because video security systems use a large number of cameras, bandwidth and storage space are the top priorities for operating the system economically. To keep costs down, companies can reduce bandwidth and storage only by compressing more heavily. Compressed images need less bandwidth and less storage space, but heavier compression produces the differences you can see in the next picture. At first glance the two images appear identical; the difference shows up when we zoom in digitally. The image on the right is less compressed than the one on the left, so its details remain visible when it is enlarged digitally. Compression reduces bandwidth and storage, but it also reduces quality. In video security systems, the video that is actually reviewed is about 3% of the total recorded video. The important question for these systems is: can you get enough detail when you need an image after an event? To answer yes, it is essential to achieve the best quality at the lowest bandwidth.


Game of Numbers

- Everyone has joined the numbers race for the highest resolution and the lowest bandwidth. However, once you look at what the numbers really mean, system design becomes increasingly difficult.

Factors that affect image resolution fall into three groups:

- Camera factors

- Transmission factors

- Display factors

The best results are obtained when the sensors, lenses, transmission systems, displays and monitoring computers are suitable for continuous operation and each component matches the resolution of the system. While achieving this, the system must also remain economical.

- Many manufacturers currently produce 4K cameras. However, if you look at the lenses fitted to those cameras, you have to search hard to find a manufacturer that uses a lens matched to the sensor. In general, 4K cameras use lenses with a resolution of 6MP or less.

- Sony is the only manufacturer to offer a 4K camera with a quality lens rated above 4K resolution (as of the date of publication).

What is the effect?

- The center of the lens produces the best image. If you attach a good 3MP lens to a 10MP camera, the center can be nice and sharp, but sharpness falls off toward the edges.

- But there is a positive side to this: lower resolution = lower bandwidth.

PPM: Number of pixels per 1m

There is no limit to the distance a security camera can see in an open area. As the distance grows, however, the gaps between objects shrink in the image until the objects can no longer be separated, and the viewer cannot tell them apart. What matters is the camera and lens combination. For example, you can even observe Jupiter if you attach any CS-mount camera to a telescope. The deciding factor is how well the camera and lens combination can display the object or area the viewer wants to see.

In video security systems, the visibility of a subject or area is measured in pixels per meter. The type of camera and the focal length of the lens determine the field of view (FoV). The distance from the camera to the object determines how many pixels the object occupies. For example, suppose we frame a car so that it fills the whole width of the screen with a 2MP camera and lens combination: the car, with an average width of 1.8m, spans a 1920-pixel image. In this case, 1920 / 1.8 = 1,067 ppm. In other words, 1,067 pixels are used to render 1m of the scene horizontally. This value is called pixels per meter (ppm, abbreviated mbp in Turkish). Formula:

ppm = Horizontal Pixel Count ÷ Horizontal Field of View Width (m)

The horizontal pixel count in the formula above is the horizontal pixel value of the camera used (1920 pixels for a Full HD 1920x1080 camera, 3840 pixels for a 4K 3840x2160 camera). The horizontal field of view can be calculated, and most camera and lens manufacturers offer calculators for this. The size of the camera's sensor is also a factor in field of view calculations, so it is useful to use the manufacturers' calculators. Another illustrative example: a 2-megapixel camera with a 4-millimeter lens covers an area of 13.42 x 7.55m at 10 meters. The pixel density at 10 meters is 1920 / 13.42 = 143 ppm. At 5 meters the value is about 286 ppm, and at 3 meters it is 477 ppm.
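The formula and the two worked examples above can be checked with a few lines of Python. This is only a sketch: the function names are made up for illustration, and scaling the field of view linearly with distance is a simplifying assumption for a fixed lens, not a replacement for a manufacturer's calculator.

```python
def pixels_per_meter(horizontal_pixels: int, fov_width_m: float) -> float:
    """ppm = horizontal pixel count / horizontal field-of-view width in meters."""
    if fov_width_m <= 0:
        raise ValueError("field-of-view width must be positive")
    return horizontal_pixels / fov_width_m


def fov_width_at(distance_m: float, ref_width_m: float, ref_distance_m: float) -> float:
    """Scale a known field-of-view width linearly with distance (fixed lens assumed)."""
    return ref_width_m * distance_m / ref_distance_m


if __name__ == "__main__":
    # Car example: a 1.8 m wide car fills a 1920-pixel-wide frame.
    print(round(pixels_per_meter(1920, 1.8)), "ppm")

    # 2 MP camera with a 4 mm lens: 13.42 m wide field of view at 10 m.
    for distance in (10, 5, 3):
        width = fov_width_at(distance, ref_width_m=13.42, ref_distance_m=10)
        print(f"{distance} m: {round(pixels_per_meter(1920, width))} ppm")
```

Halving the distance halves the field-of-view width and therefore doubles the pixel density, which is why the density rises so quickly close to the camera.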

[Comparison images at 278 ppm, 139 ppm, 69 ppm and 34 ppm]

The effect of ppm on the image is shown in the pictures below. When distances are measured in feet, the corresponding unit is ppf (pixels per foot). The ppm calculation is theoretical and assumes that the camera actually delivers the specified resolution (for example, 1920x1080). In particular, fitting a lens with lower resolving power to a high-resolution camera is one of the main reasons why a camera cannot deliver the expected image. In that case the calculated ppm value is not achieved on the monitor.

DORI

- DETECT - (25ppm) Determining the presence and movement of a person

- OBSERVE - (63ppm) Observing what a person is doing

- RECOGNISE - (125ppm) Identifying a person - movement, clothing, behavior

- IDENTIFY - (250ppm) Identifying a person explicitly

DORI (AGTB in Turkish) is defined by the number of horizontal pixels per meter of the image (ppm, or mbp in Turkish) and is derived from the EN 50132-7 standard.
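As a rough illustration, the four thresholds listed above can be turned into a lookup, and combined with the ppm formula to estimate how far from the camera each level can still be met. The function names are hypothetical, and the distance estimate assumes that the field of view widens linearly with distance; the 1920-pixel, 13.42m-at-10m camera is the example from the ppm section.

```python
# DORI thresholds in pixels per meter, as listed in the article.
DORI_LEVELS = [
    ("Identify", 250),
    ("Recognise", 125),
    ("Observe", 63),
    ("Detect", 25),
]


def dori_level(ppm: float) -> str:
    """Return the highest DORI level that the given pixel density satisfies."""
    for name, threshold in DORI_LEVELS:
        if ppm >= threshold:
            return name
    return "Below Detect"


def max_distance_m(horizontal_pixels: int, fov_width_at_10m: float,
                   threshold_ppm: float) -> float:
    """Farthest distance at which the pixel density still meets the threshold.

    Assumes the field-of-view width grows linearly with distance.
    """
    ppm_at_10m = horizontal_pixels / fov_width_at_10m
    return 10.0 * ppm_at_10m / threshold_ppm


if __name__ == "__main__":
    print(dori_level(143))  # density of the example camera at 10 m
    for name, threshold in DORI_LEVELS:
        distance = max_distance_m(1920, 13.42, threshold)
        print(f"{name}: up to about {distance:.1f} m")
```

With these assumptions the example camera reaches the Identify level only within roughly the first 6 meters, while Detect remains possible beyond 50 meters.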


IMAGE COMPRESSION

In Full HD (1920 x 1080), the uncompressed video stream is about 1.4Gbps. Such a bandwidth makes closed-circuit camera systems and remote monitoring impractical. With H.264 compression, a bandwidth of 1 to 8 Mbps* is sufficient for good image quality at 25 fps. Although H.264 uses cleverly designed coding techniques, it also discards a lot of data. MPEG-4 and H.264 use I-frames and P-frames to significantly reduce the amount of data required for video. In simple terms, an I-frame is a full image compressed in a similar way to a JPEG photo, while a P-frame contains only the differences from the preceding frame. So if nothing changes in the image, the P-frames generate very little data. In a scene with dense motion, however, the P-frames can generate a large amount of data.
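The order of magnitude of the uncompressed figure can be reproduced with simple arithmetic. The sketch below assumes 24 bits per pixel (8 bits for each of three color channels), which the article does not state; under that assumption Full HD comes to roughly 1.24 Gbps at 25 fps and 1.49 Gbps at 30 fps, the same range as the figure quoted above.

```python
def uncompressed_bps(width: int, height: int, fps: float,
                     bits_per_pixel: int = 24) -> float:
    """Raw video bit rate in bits per second (24 bits per pixel is an assumption)."""
    return width * height * bits_per_pixel * fps


def compression_ratio(raw_bps: float, encoded_bps: float) -> float:
    """How many times smaller the encoded stream is than the raw stream."""
    return raw_bps / encoded_bps


if __name__ == "__main__":
    raw = uncompressed_bps(1920, 1080, fps=25)
    print(f"raw Full HD at 25 fps: {raw / 1e9:.2f} Gbps")
    for mbps in (1, 8):
        ratio = compression_ratio(raw, mbps * 1e6)
        print(f"{mbps} Mbps stream: about {ratio:.0f}:1")
```

Seen this way, an H.264 stream of 1 to 8 Mbps corresponds to a reduction of somewhere between about 150 and 1,250 times relative to the raw signal.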


Benefits of MPEG4, H.264 and H.265 Encodings

With variable bit rate encoding, the amount of data in the video stream depends on the detail and activity in the image. More detail and more movement increase the bandwidth. In a gray corridor with no motion, the amount of data in the P-frames is very low. By contrast, a camera in a shopping center produces a very large amount of P-frame data while there is movement.

(*) Factors such as the density of motion in the image and the use of packet compression affect the amount of data.

Based on this, high-compression coding provides great benefits in areas with little or no activity. When viewing a busy shopping mall or motorway junction, however, where the captured details are important, less compression may be preferred. In addition, higher-compression encodings make frame-by-frame playback and reverse playback more difficult for forensic analysis, because frame-by-frame playback requires an I-frame, and as the interval between I-frames grows, less detail can be recovered from the recorded image.

Each compression system has its benefits, and over time the growing processing power of devices (such as cameras, recorders, encoders and decoders) allows more advanced encoding algorithms. The catch is that more processing power is needed in both the encoder (camera) and the decoder (client PC or hardware decoder). When H.264 replaced MPEG-4, many companies and organizations were slow to adopt the standard once they learned that their client computers needed upgrading. The same applies to H.265 today. Below is a graphical representation of the operation.

Note: More complex coding requires more processing, so the delay (latency) is longer as well.
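The dependence of bit rate on scene activity described in this section can be sketched with a simple model of one I-frame followed by a run of P-frames. The frame sizes below are hypothetical values chosen only for illustration, not measurements, and the function name is made up for this example.

```python
def stream_bitrate_mbps(i_frame_kb: float, p_frame_kb: float,
                        gop_length: int, fps: float = 25) -> float:
    """Average bit rate of a stream with one I-frame per group of gop_length frames.

    Frame sizes are in kilobytes (1 kB = 1000 bytes); the rest of each group
    consists of P-frames of equal size.
    """
    if gop_length < 1:
        raise ValueError("gop_length must be at least 1")
    group_bytes = (i_frame_kb + (gop_length - 1) * p_frame_kb) * 1000
    group_seconds = gop_length / fps
    return group_bytes * 8 / group_seconds / 1e6


if __name__ == "__main__":
    # Hypothetical frame sizes: small P-frames for a static corridor,
    # large P-frames for a busy shopping center.
    quiet = stream_bitrate_mbps(i_frame_kb=100, p_frame_kb=2, gop_length=25)
    busy = stream_bitrate_mbps(i_frame_kb=100, p_frame_kb=30, gop_length=25)
    longer_gop = stream_bitrate_mbps(i_frame_kb=100, p_frame_kb=2, gop_length=50)
    print(f"quiet corridor, I-frame every 25 frames: {quiet:.2f} Mbps")
    print(f"busy scene, I-frame every 25 frames:     {busy:.2f} Mbps")
    print(f"quiet corridor, I-frame every 50 frames: {longer_gop:.2f} Mbps")
```

The model also shows the trade-off mentioned above: spacing I-frames further apart lowers the bit rate in a quiet scene, at the cost of fewer full images for frame-by-frame review.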

INTELLIGENT CODING

Smart H.264 codecs are now available that optimize video compression based on content. The following techniques are used:

- Aggressive noise reduction in low-light scenes.

- Object detection to determine compression levels.

- Region of interest (RoI) encoding: selecting specific areas of an image and applying a separate compression level to each region.

These can significantly reduce bandwidth, but they should be used with caution so that users still understand what they are looking at. Intelligent coding techniques can also reduce costs by reducing the number of cameras in an installation.
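To make the region-of-interest idea concrete, the sketch below builds a per-block map of quantization parameters (QP) for a frame. In H.264 the QP ranges from 0 to 51 and a lower value means finer quantization, so blocks inside a region of interest get a lower QP than the background. How a particular camera exposes RoI settings is vendor specific; the function, the QP values and the rectangle used here are hypothetical.

```python
from typing import List, Tuple

Rect = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def roi_qp_map(frame_w: int, frame_h: int, rois: List[Rect],
               base_qp: int = 36, roi_qp: int = 24,
               block: int = 16) -> List[List[int]]:
    """Per-block QP map: lower QP (better quality) inside regions of interest."""
    cols = (frame_w + block - 1) // block
    rows = (frame_h + block - 1) // block
    qp = [[base_qp] * cols for _ in range(rows)]
    for x, y, w, h in rois:
        # Cover every block the rectangle touches, clipped to the frame.
        first_col = max(x // block, 0)
        last_col = min((x + w - 1) // block, cols - 1)
        first_row = max(y // block, 0)
        last_row = min((y + h - 1) // block, rows - 1)
        for r in range(first_row, last_row + 1):
            for c in range(first_col, last_col + 1):
                qp[r][c] = roi_qp
    return qp


if __name__ == "__main__":
    # Hypothetical region: a 320x160 pixel doorway in a 1920x1080 frame.
    qp = roi_qp_map(1920, 1080, rois=[(640, 320, 320, 160)])
    roi_blocks = sum(row.count(24) for row in qp)
    total_blocks = len(qp) * len(qp[0])
    print(f"{roi_blocks} of {total_blocks} blocks use the lower QP")
```

Only a small share of the blocks is encoded at high quality, which is where the bandwidth saving comes from, and also why detail outside the selected regions may be missing when it is needed later.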

Many applications require high resolution, but only for selected areas of the image. The usual approach is to use multiple cameras, such as a fixed overview camera plus a close-up camera or PTZ.

Sony 4K cameras offer intelligent cropping: one high-resolution camera delivers up to 5 streams (an overview plus 4 cropped areas). This provides a continuous overview and high-resolution close-ups from a single camera.

Taken with a single SNC-VM772R.


INTELLIGENT CROPPING WITH MOTION DETECTION

By combining cropping with video motion detection (VMD), Sony provides dynamic cropping. While the overview stream continues, up to 4 moving objects can be detected simultaneously and streamed as intelligently cropped areas. On moving PTZ cameras, by contrast, the automatic tracking system can track only one target, and the overall view is lost during playback.

SUMMARY

It is important to understand the camera application before deciding which resolution and how much bandwidth to allocate.

- For Detect: Low resolution and low bandwidth are acceptable.

- For Observe: Medium resolution is suitable and bandwidth may be low.

- For Recognise: Higher resolution and less compression are required.

- For Identify: High resolution and low compression are best.

Note: The resolution in ppm is inversely proportional to the distance from the camera. As the distance from the camera to the area or subject increases, its resolution in ppm decreases.

Testing the calculated ppm against real images is the best way to judge how well people can be recognised and identified in a real situation. From such experiments, you can accurately select the type of camera, the lens and the compression level. It is also important to set up a structure that can change the camera profile at different times, reducing bandwidth when there is no movement and increasing it when more detail is required. For example, in a warehouse during working hours, all cameras may require a higher level of detail. Outside working hours, however, “Detect” or “Observe” will be sufficient for most cameras. Understanding the link between bandwidth and resolution in video security systems enables you to design systems economically and competitively. It reduces the budget that individuals and institutions must allocate for these applications and, naturally, contributes to the protection of the country's assets.
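The saving from switching camera profiles by time of day can be estimated with a short calculation. In this sketch the 8 Mbps and 1 Mbps rates are the two ends of the H.264 range quoted earlier in the article, while the split into 10 working hours and 14 other hours is an assumption made only for the example.

```python
def daily_storage_gb(schedule) -> float:
    """Storage per camera per day in gigabytes (1 GB = 10**9 bytes).

    schedule: iterable of (hours, bit rate in Mbps) pairs covering the day.
    """
    total_bits = sum(hours * 3600 * mbps * 1e6 for hours, mbps in schedule)
    return total_bits / 8 / 1e9


if __name__ == "__main__":
    # Hypothetical warehouse: high-detail profile during 10 working hours,
    # low-bandwidth profile for the remaining 14 hours.
    always_high = daily_storage_gb([(24, 8)])
    scheduled = daily_storage_gb([(10, 8), (14, 1)])
    print(f"constant 8 Mbps:    {always_high:.1f} GB per day")
    print(f"scheduled profiles: {scheduled:.1f} GB per day")
```

Under these assumptions the scheduled profile roughly halves the daily storage per camera, and the difference multiplies across every camera in the installation.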
